From AI assistants doing deep research to autonomous vehicles making split-second navigation decisions, AI adoption is exploding across industries.

Behind every one of those interactions is inference: the stage after training where an AI model processes inputs and produces outputs in real time.

Today’s most advanced AI reasoning models, capable of multistep logic and complex decision-making, generate far more tokens per interaction than older models, driving a surge in token usage and the need for infrastructure that can manufacture intelligence at scale.

AI factories are one way of meeting these growing needs.

But running inference at such a large scale isn’t just about throwing more compute at the problem.

To deploy AI with maximum efficiency, inference must be evaluated based on the Think SMART framework:

- Scale and complexity

- Multidimensional performance

- Architecture and software

- Return on investment driven by performance

- Technology ecosystem and install base

Scale and Complexity

As models evolve from compact applications to massive, multi-expert systems, inference must keep pace with increasingly diverse workloads, from answering quick, single-shot queries to multistep reasoning involving millions of tokens.

The expanding size and intricacy of AI models introduce major implications for inference, such as resource intensity, latency and throughput, energy and costs, as well as diversity of use cases.

To meet this complexity, AI service providers and enterprises are scaling up their infrastructure, with new AI factories coming online from partners like CoreWeave, Dell Technologies, Google Cloud and Nebius.

Multidimensional Performance

Scaling complex AI deployments means AI factories need the flexibility to serve tokens across a wide spectrum of use cases while balancing accuracy, latency and costs.

Some workloads, such as real-time speech-to-text translation, demand ultralow latency and a large number of tokens per user, straining computational resources for maximum responsiveness. Others are latency-insensitive and geared for sheer throughput, such as generating answers to dozens of complex questions simultaneously.

But most popular real-time scenarios operate somewhere in the middle: they require quick responses to keep users happy and high throughput to serve up to millions of users simultaneously, all while minimizing cost per token.

For example, the NVIDIA inference platform is built to balance both latency and throughput, powering inference benchmarks on models like gpt-oss, DeepSeek-R1 and Llama 3.1.

What to Assess to Achieve Optimal Multidimensional Performance

- Throughput: How many tokens can the system process per second? The more, the better for scaling workloads and revenue.

- Latency: How quickly does the system respond to each individual prompt? Lower latency means a better experience for users, which is crucial for interactive applications.

- Scalability: Can the system setup quickly adapt as demand increases, going from one to thousands of GPUs without complex restructuring or wasted resources?

- Cost Efficiency: Is performance per dollar high, and are those gains sustainable as system demands grow?
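The first, second and fourth criteria above can be turned into numbers from a single benchmark run. The following is a minimal sketch, not a standard benchmarking tool: the `RunStats` fields, the sample values and the GPU-hour price are all hypothetical and exist only to show the arithmetic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunStats:
    """Hypothetical measurements collected from one benchmark run."""
    output_tokens: int                # total tokens generated across all requests
    wall_seconds: float               # wall-clock duration of the run
    request_latencies: List[float]    # end-to-end latency of each request, in seconds
    gpu_count: int                    # number of GPUs used
    gpu_hour_cost: float              # assumed price per GPU-hour, in dollars


def throughput_tokens_per_sec(s: RunStats) -> float:
    """Throughput: tokens produced per second of wall-clock time."""
    return s.output_tokens / s.wall_seconds


def mean_latency_sec(s: RunStats) -> float:
    """Latency: average time a single request waited for its answer."""
    return sum(s.request_latencies) / len(s.request_latencies)


def cost_per_million_tokens(s: RunStats) -> float:
    """Cost efficiency: dollars spent on GPUs per one million tokens."""
    run_cost = s.gpu_count * s.gpu_hour_cost * (s.wall_seconds / 3600)
    return run_cost / s.output_tokens * 1_000_000


# Made-up numbers, for illustration only.
stats = RunStats(
    output_tokens=1_800_000,
    wall_seconds=600.0,
    request_latencies=[0.8, 1.2, 1.0, 1.0],
    gpu_count=8,
    gpu_hour_cost=3.0,
)

print("tokens/sec:", throughput_tokens_per_sec(stats))
print("mean latency (s):", mean_latency_sec(stats))
print("$ per million tokens:", round(cost_per_million_tokens(stats), 2))
```

Tracking all three figures together matters because a configuration change, such as a larger batch size, can raise throughput and lower cost per token while making per-request latency worse.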

Architecture and Software

AI inference performance needs to be engineered from the ground up. It comes from hardware and software working in sync: GPUs, networking and code tuned to avoid bottlenecks and make the most of every cycle.

Powerful architecture without smart orchestration wastes potential; great software without fast, low-latency hardware means sluggish performance. The key is architecting a system so that it can quickly, efficiently and flexibly turn prompts into useful answers.

Enterprises can use NVIDIA infrastructure to build a system that delivers optimal performance.

Architecture Optimized for Inference at AI Factory Scale

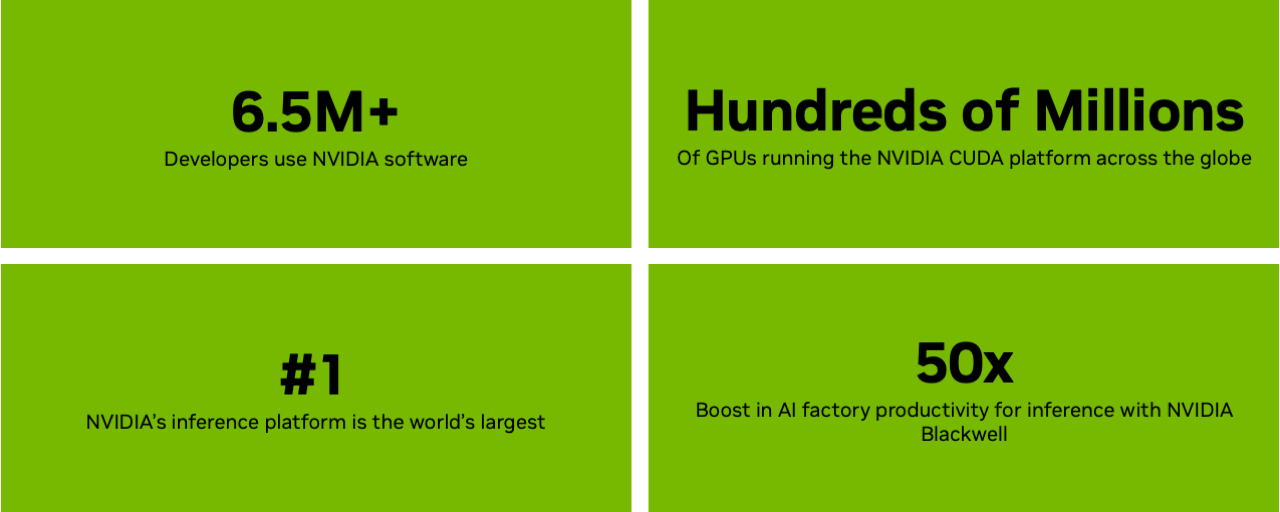

The NVIDIA Blackwell platform unlocks a 50x boost in AI factory productivity for inference, meaning enterprises can optimize throughput and interactive responsiveness, even when running the most complex models.

The NVIDIA GB200 NVL72 rack-scale system connects 36 NVIDIA Grace CPUs and 72 Blackwell GPUs with NVIDIA NVLink interconnect, delivering 40x higher revenue potential, 30x higher throughput, 25x more energy efficiency and 300x more water efficiency for demanding AI reasoning workloads.

Further, NVFP4 is a low-precision format that delivers peak performance on NVIDIA Blackwell and slashes energy, memory and bandwidth demands without skipping a beat on accuracy, so users can deliver more queries per watt and lower costs per token.
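To make the idea of a low-precision format concrete, here is a minimal sketch of generic block-scaled 4-bit rounding in plain Python. It is not the NVFP4 specification: the value grid (a 4-bit float with two exponent bits and one mantissa bit), the block size of 16 and the full-precision scale are assumptions chosen for illustration, and the article does not describe the format's internals.

```python
# Magnitudes assumed representable by a 4-bit float (1 sign, 2 exponent, 1 mantissa bit).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumed number of values that share one scale factor


def quantize_block(values):
    """Map one block to (scale, codes); each code is a signed value from FP4_GRID."""
    peak = max(abs(v) for v in values)
    # Choose the scale so the largest magnitude lands on the top of the grid.
    scale = peak / FP4_GRID[-1] if peak > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_GRID, key=lambda g: abs(g - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate values from a quantized block."""
    return [c * scale for c in codes]


def quantize(values, block_size=BLOCK_SIZE):
    """Split a flat list into blocks and quantize each block independently."""
    return [
        quantize_block(values[i:i + block_size])
        for i in range(0, len(values), block_size)
    ]


weights = [6.0, -3.0, 1.5, 0.4]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
print("scale:", scale)
print("codes:", codes)
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each value then needs only 4 bits plus a share of one scale per block, which is where the memory and bandwidth savings come from. Scaling per block rather than per tensor keeps one outlier from wiping out the resolution of every other value.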

Full-Stack Inference Platform Accelerated on Blackwell

Enabling inference at AI factory scale requires more than accelerated architecture. It requires a full-stack platform with multiple layers of solutions and tools that work in concert.

Modern AI deployments require dynamic autoscaling from one to thousands of GPUs. The NVIDIA Dynamo platform steers distributed inference to dynamically assign GPUs and optimize data flows, delivering up to 4x more performance without cost increases. New cloud integrations further improve scalability and ease of deployment.
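As a rough illustration of the sizing decision behind autoscaling, the sketch below computes how many GPUs a given token demand would call for. It is a toy model and not Dynamo's API or algorithm: the function name, the headroom parameter and the fleet limits are all hypothetical.

```python
import math


def gpus_needed(demand_tokens_per_sec, per_gpu_tokens_per_sec,
                min_gpus=1, max_gpus=1024, headroom=0.25):
    """Return a GPU count that covers demand plus a safety margin.

    All parameters are illustrative assumptions:
      demand_tokens_per_sec   current aggregate token demand
      per_gpu_tokens_per_sec  measured sustainable throughput of one GPU
      headroom                extra capacity fraction kept for bursts
    """
    if per_gpu_tokens_per_sec <= 0:
        raise ValueError("per-GPU throughput must be positive")
    required = demand_tokens_per_sec * (1 + headroom) / per_gpu_tokens_per_sec
    # Round up to whole GPUs, then clamp to the allowed fleet size.
    return max(min_gpus, min(max_gpus, math.ceil(required)))


# Made-up demand levels, for illustration only.
for demand in (0, 10_000, 2_000_000):
    print(demand, "tokens/sec ->", gpus_needed(demand, 1_000), "GPUs")
```

A production orchestrator also has to weigh factors this sketch ignores, such as where cached context already lives and how long it takes to bring a new GPU into service.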

For inference workloads focused on getting optimal performance per GPU, such as speeding up large mixture-of-experts models, frameworks like NVIDIA TensorRT-LLM are helping developers achieve breakthrough performance.

With its new PyTorch-centric workflow, TensorRT-LLM streamlines AI deployment by removing the need for manual engine management. These solutions aren’t just powerful on their own; they’re built to work in tandem. For example, using Dynamo and TensorRT-LLM, mission-critical inference providers like Baseten can immediately deliver state-of-the-art model performance even on new frontier models like gpt-oss.

On the model side, families like NVIDIA Nemotron are built with open training data for transparency, while still generating tokens quickly enough to handle advanced reasoning tasks with high accuracy, without increasing compute costs. And with NVIDIA NIM, those models can be packaged into ready-to-run microservices, making it easier for teams to roll them out and scale across environments while achieving the lowest total cost of ownership.

Together, these layers (dynamic orchestration, optimized execution, well-designed models and simplified deployment) form the backbone of inference enablement for cloud providers and enterprises alike.

Return on Investment Driven by Performance

As AI adoption grows, organizations are increasingly looking to maximize the return on investment from each user query.

Performance is the biggest driver of return on investment. A 4x increase in performance from the NVIDIA Hopper architecture to Blackwell yields up to 10x profit growth within a similar power budget.

In power-limited data centers and AI factories, generating more tokens per watt translates directly to higher revenue per rack. Managing token throughput efficiently, balancing latency, accuracy and user load, is crucial for keeping costs down.
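The link between tokens per watt and revenue per rack is simple arithmetic once the power budget is fixed. The sketch below uses made-up figures; the rack power, efficiency and token price are assumptions for illustration and are not taken from the article or any NVIDIA specification.

```python
def rack_revenue_per_hour(power_watts, tokens_per_sec_per_watt,
                          price_per_million_tokens):
    """Hourly revenue of a rack running at a fixed power budget.

    tokens_per_sec_per_watt is the efficiency figure: tokens generated
    each second for every watt drawn (equivalently, tokens per joule).
    """
    tokens_per_hour = power_watts * tokens_per_sec_per_watt * 3600
    return tokens_per_hour / 1_000_000 * price_per_million_tokens


# Hypothetical rack: 100 kW budget, $2.00 charged per million tokens.
baseline = rack_revenue_per_hour(100_000, 0.5, 2.0)
improved = rack_revenue_per_hour(100_000, 1.0, 2.0)  # efficiency doubled

print("baseline $/hour:", baseline)
print("improved $/hour:", improved)
```

Because the power term is fixed, revenue in this model scales linearly with efficiency: doubling tokens per watt doubles revenue per rack without drawing any additional power.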

The industry is seeing rapid cost improvements, going as far as reducing costs-per-million-tokens by 80% through stack-wide optimizations. The same gains are achievable running gpt-oss and other open-source models from NVIDIA’s inference ecosystem, whether in hyperscale data centers or on local AI PCs.

Technology Ecosystem and Install Base

As models advance, featuring longer context windows, more tokens and more sophisticated runtime behaviors, their inference performance scales.

Open models are a driving force in this momentum, accelerating over 70% of AI inference workloads today. They enable startups and enterprises alike to build custom agents, copilots and applications across every sector.

Open-source communities play a critical role in the generative AI ecosystem, fostering collaboration, accelerating innovation and democratizing access. NVIDIA has over 1,000 open-source projects on GitHub in addition to 450 models and more than 80 datasets on Hugging Face. These help integrate popular frameworks like JAX, PyTorch, vLLM and TensorRT-LLM into NVIDIA’s inference platform, ensuring maximum inference performance and flexibility across configurations.

That’s why NVIDIA continues to contribute to open-source projects like llm-d and collaborate with industry leaders on open models, including Llama, Google Gemma, NVIDIA Nemotron, DeepSeek and gpt-oss, helping bring AI applications from idea to production at unprecedented speed.

The Bottom Line for Optimized Inference

The NVIDIA inference platform, coupled with the Think SMART framework for deploying modern AI workloads, helps enterprises ensure their infrastructure can keep pace with the demands of rapidly advancing models, and that each token generated delivers maximum value.

Learn more about how inference drives the revenue-generating potential of AI factories.

For monthly updates, sign up for the NVIDIA Think SMART newsletter.