In the latest MLPerf Inference v5.0 benchmarks, which reflect some of the most challenging inference scenarios, the NVIDIA Blackwell platform set records and marked NVIDIA’s first MLPerf submission using the NVIDIA GB200 NVL72 system, a rack-scale solution designed for AI reasoning.

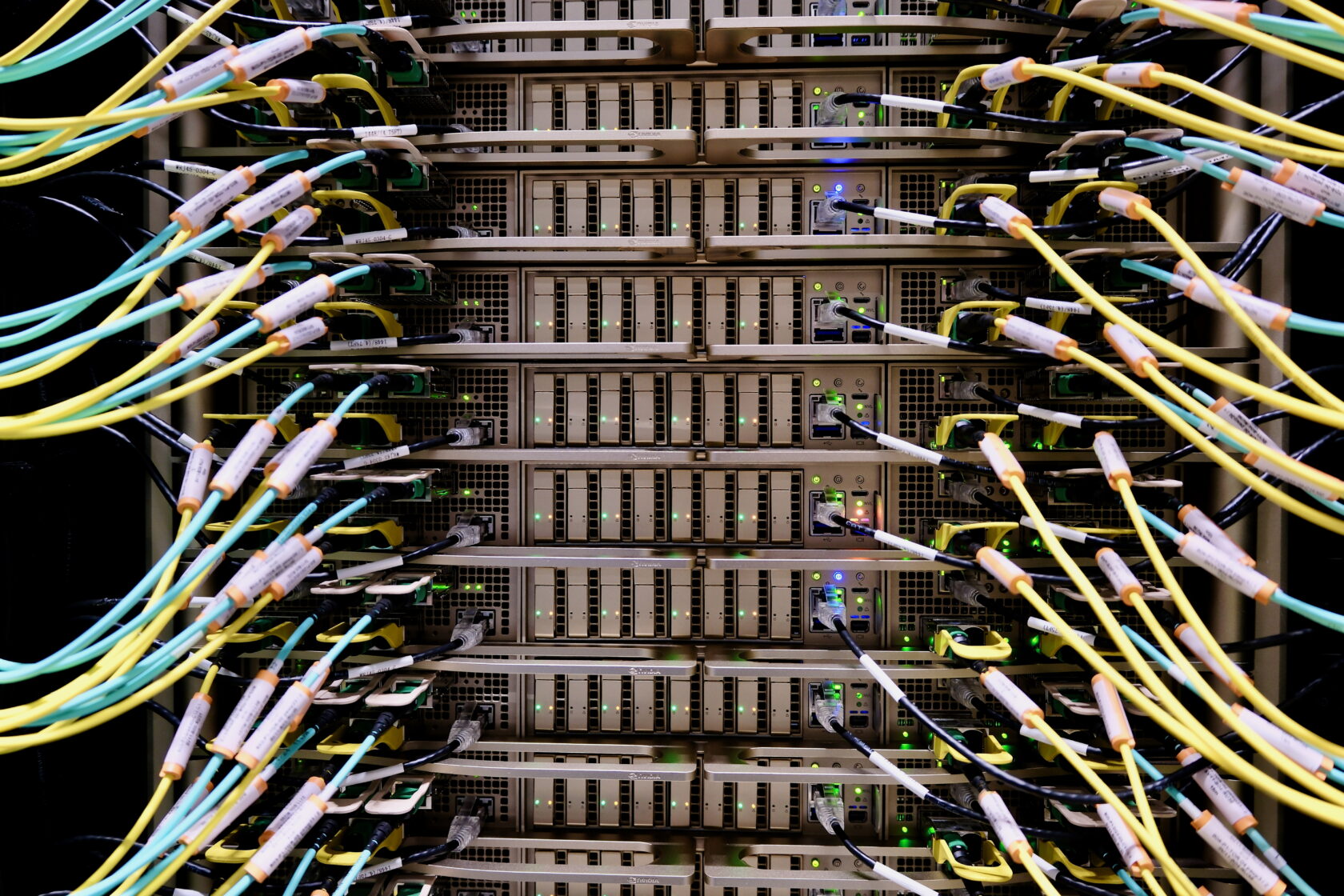

Delivering on the promise of cutting-edge AI takes a new kind of compute infrastructure, called AI factories. Unlike traditional data centers, AI factories do more than store and process data: they manufacture intelligence at scale by transforming raw data into real-time insights. The goal for AI factories is simple: deliver accurate answers to queries quickly, at the lowest cost and to as many users as possible.

The complexity of pulling this off is significant and takes place behind the scenes. As AI models grow to billions and trillions of parameters to deliver smarter replies, the compute required to generate each token increases. This requirement reduces the number of tokens that an AI factory can generate and increases cost per token. Keeping inference throughput high and cost per token low requires rapid innovation across every layer of the technology stack, spanning silicon, network systems and software.
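The link between throughput and cost per token can be made concrete with a small sketch. The function name, the hourly cost, and the throughput figures below are hypothetical values chosen for illustration only; they are not MLPerf results or NVIDIA pricing.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Cost to generate one million tokens at a steady throughput.

    Both inputs are illustrative assumptions, not measured values.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical system: same hourly cost, two different throughputs.
baseline = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=10_000.0)
halved = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=5_000.0)

print(f"baseline: ${baseline:.2f} per million tokens")
print(f"half the throughput: ${halved:.2f} per million tokens")
```

At a fixed infrastructure cost, halving throughput doubles the cost per token, which is why a model that needs more compute per token raises cost unless the stack gets faster.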

The latest updates to MLPerf Inference, a peer-reviewed industry benchmark of inference performance, include the addition of Llama 3.1 405B, one of the largest and most challenging-to-run open-weight models. The new Llama 2 70B Interactive benchmark features much stricter latency requirements than the original Llama 2 70B benchmark, better reflecting the constraints of production deployments in delivering the best possible user experiences.

In addition to the Blackwell platform, the NVIDIA Hopper platform demonstrated exceptional performance across the board, with performance increasing significantly over the last year on Llama 2 70B thanks to full-stack optimizations.

NVIDIA Blackwell Sets New Records

The GB200 NVL72 system, which connects 72 NVIDIA Blackwell GPUs to act as a single, massive GPU, delivered up to 30x higher throughput on the Llama 3.1 405B benchmark than the NVIDIA H200 NVL8 submission this round. This feat was achieved through more than triple the performance per GPU and a 9x larger NVIDIA NVLink interconnect domain.
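The two factors behind the 30x figure can be checked with simple arithmetic. The sketch below assumes, as a simplification, that system-level speedup is the product of the GPU-count ratio (72 versus 8) and the per-GPU speedup; the function name is hypothetical, and 30x is the article's "up to" figure, so the result is an upper bound.

```python
def implied_per_gpu_speedup(system_speedup: float, gpus_new: int, gpus_old: int) -> float:
    """Per-GPU speedup implied by a system-level speedup and the two GPU counts.

    Assumes system speedup = (gpus_new / gpus_old) * per-GPU speedup,
    which is a simplification of how the systems actually scale.
    """
    domain_ratio = gpus_new / gpus_old
    return system_speedup / domain_ratio


domain_ratio = 72 / 8  # GB200 NVL72 versus H200 NVL8
per_gpu = implied_per_gpu_speedup(system_speedup=30.0, gpus_new=72, gpus_old=8)

print(f"NVLink domain ratio: {domain_ratio:.0f}x")
print(f"implied per-GPU speedup: {per_gpu:.2f}x")
```

Under that assumption, a 9x larger NVLink domain and a 30x system-level gain imply roughly 3.3x per GPU, consistent with "more than triple."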

While many companies run MLPerf benchmarks on their hardware to gauge performance, only NVIDIA and its partners submitted and published results on the Llama 3.1 405B benchmark.

Production inference deployments often have latency constraints on two key metrics. The first is time to first token (TTFT), or how long it takes for a user to begin seeing a response to a query given to a large language model. The second is time per output token (TPOT), or how quickly tokens are delivered to the user.
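Both metrics can be computed from the timestamps at which tokens arrive. The sketch below uses one common convention, in which TPOT is the average gap between tokens after the first; it is not necessarily the exact definition used by the MLPerf harness, and the function names and timestamps are hypothetical.

```python
from typing import Sequence


def time_to_first_token(request_ms: float, token_ms: Sequence[float]) -> float:
    """Delay between sending the request and receiving the first token."""
    if not token_ms:
        raise ValueError("at least one token timestamp is required")
    return token_ms[0] - request_ms


def time_per_output_token(token_ms: Sequence[float]) -> float:
    """Average gap between consecutive tokens, excluding the first token."""
    if len(token_ms) < 2:
        raise ValueError("at least two token timestamps are required")
    return (token_ms[-1] - token_ms[0]) / (len(token_ms) - 1)


# Hypothetical arrival times in milliseconds for a four-token response.
request_ms = 0.0
token_ms = [450.0, 490.0, 530.0, 570.0]

print(time_to_first_token(request_ms, token_ms))  # 450.0
print(time_per_output_token(token_ms))            # 40.0
```

In this example the user waits 450 ms to see the first token and then receives a new token every 40 ms. A benchmark with stricter latency limits would require both numbers to stay under lower thresholds.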

The new Llama 2 70B Interactive benchmark has a 5x shorter TPOT and 4.4x lower TTFT, modeling a more responsive user experience. On this test, NVIDIA’s submission using an NVIDIA DGX B200 system with eight Blackwell GPUs tripled performance over using eight NVIDIA H200 GPUs, setting a high bar for this more challenging version of the Llama 2 70B benchmark.

Combining the Blackwell architecture and its optimized software stack delivers new levels of inference performance, paving the way for AI factories to deliver higher intelligence, increased throughput and faster token rates.

NVIDIA Hopper AI Factory Value Continues Increasing

The NVIDIA Hopper architecture, introduced in 2022, powers many of today’s AI inference factories and continues to power model training. Through ongoing software optimization, NVIDIA increases the throughput of Hopper-based AI factories, leading to greater value.

On the Llama 2 70B benchmark, first introduced a year ago in MLPerf Inference v4.0, H100 GPU throughput has increased by 1.5x. The H200 GPU, based on the same Hopper GPU architecture with larger and faster GPU memory, extends that increase to 1.6x.

Hopper also ran every benchmark, including the newly added Llama 3.1 405B, Llama 2 70B Interactive and graph neural network tests. This versatility means Hopper can run a wide range of workloads and keep pace as models and usage scenarios grow more challenging.

It Takes an Ecosystem

In this MLPerf round, 15 partners submitted stellar results on the NVIDIA platform, including ASUS, Cisco, CoreWeave, Dell Technologies, Fujitsu, Giga Computing, Google Cloud, Hewlett Packard Enterprise, Lambda, Lenovo, Oracle Cloud Infrastructure, Quanta Cloud Technology, Supermicro, Sustainable Metal Cloud and VMware.

The breadth of submissions reflects the reach of the NVIDIA platform, which is available across all cloud service providers and server makers worldwide.

MLCommons’ work to continuously evolve the MLPerf Inference benchmark suite, keeping pace with the latest AI developments and providing the ecosystem with rigorous, peer-reviewed performance data, is vital to helping IT decision makers select optimal AI infrastructure.

Learn more about MLPerf.

Images and video taken at an Equinix data center in Silicon Valley.