Physical AI, the engine behind modern robotics, self-driving vehicles and smart spaces, relies on a mix of neural graphics, synthetic data generation, physics-based simulation, reinforcement learning and AI reasoning. It’s a combination well-suited to the collective expertise of NVIDIA Research, a global team that for nearly 20 years has advanced the now-converging fields of AI and graphics.

That’s why at SIGGRAPH, the premier computer graphics conference taking place in Vancouver through Thursday, Aug. 14, NVIDIA Research leaders will deliver a special address highlighting the graphics and simulation innovations enabling physical and spatial AI.

“AI is advancing our simulation capabilities, and our simulation capabilities are advancing AI systems,” said Sanja Fidler, vice president of AI research at NVIDIA. “There’s an authentic and powerful coupling between the two fields, and it’s a combination that few have.”

At SIGGRAPH, NVIDIA is unveiling new software libraries for physical AI, including NVIDIA Omniverse NuRec 3D Gaussian splatting libraries for large-scale world reconstruction, updates to the NVIDIA Metropolis platform for vision AI, and NVIDIA Cosmos and NVIDIA Nemotron reasoning models. Cosmos Reason is a new reasoning vision language model for physical AI that enables robots and vision AI agents to reason like humans using prior knowledge, physics understanding and common sense.

Many of these innovations are rooted in breakthroughs by the company’s global research team, which is presenting over a dozen papers at the show on advancements in neural rendering, real-time path tracing, synthetic data generation and reinforcement learning. These capabilities will feed the next generation of physical AI tools.

How Physical AI Unites Graphics, AI and Robotics

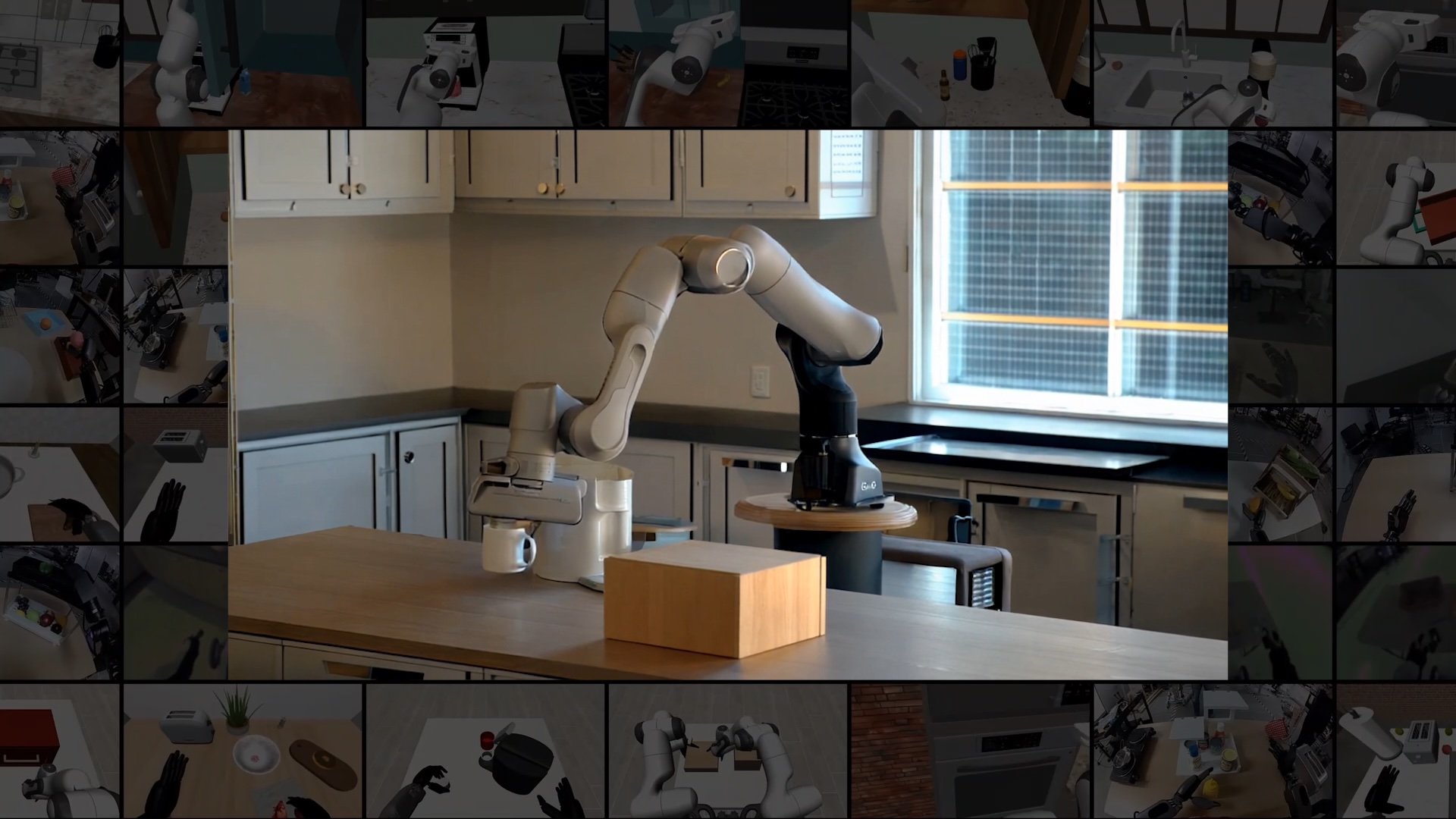

Physical AI development starts with the construction of high-fidelity, physically accurate 3D environments. Without these lifelike virtual environments, developers can’t train advanced physical AI systems such as humanoid robots in simulation, because the skills the robots would learn in virtual training wouldn’t translate well enough to the real world.

Picture an agricultural robot using the exact amount of pressure to pick peaches off trees without bruising them, or a manufacturing robot assembling microscopic electronic components on a machine where every millimeter matters.

“Physical AI needs a virtual environment that feels real, a parallel universe where the robots can safely learn through trial and error,” said Ming-Yu Liu, vice president of research at NVIDIA. “To build this virtual world, we need real-time rendering, computer vision, physical motion simulation, 2D and 3D generative AI, as well as AI reasoning. These are the things that NVIDIA Research has spent nearly 20 years to be good at.”

NVIDIA’s legacy of breakthrough research in ray tracing and real-time computer graphics, dating back to the research organization’s inception in 2006, plays a critical role in enabling the realism that physical AI simulations demand. Much of that rendering work, too, is powered by AI models, a field known as neural rendering.

“Our core rendering research fuels the creation of true-to-reality virtual worlds used to train advanced physical AI systems, while AI is in turn helping us create those 3D worlds from images,” said Aaron Lefohn, vice president of graphics research and head of the Real-Time Graphics Research group at NVIDIA. “We’re now at a point where we can take pictures and videos, an accessible form of media that anyone can capture, and rapidly reconstruct them into virtual 3D environments.”