B2B companies are always looking to optimize their hardware architecture to support the production of AI-powered software.

But investing in generative AI infrastructure can be tricky. You have to be mindful of concerns around integration with legacy systems, hardware provisioning, ML framework support, computational power, and a clear onboarding roadmap.

I wanted to understand what steps should be taken to strengthen generative AI infrastructure maturity, so I set out to evaluate the best generative AI infrastructure software.

My primary goal was to help businesses invest in smart AI growth, stay compliant with AI content laws, make use of ML model frameworks, and improve transparency and compliance.

Below is my detailed evaluation of the best generative AI infrastructure software. It includes proprietary G2 scores, real-time user reviews, top-rated features, and pros and cons to help you invest in growing your AI footprint in 2025.

6 best Generative AI Infrastructure Software in 2025: my top picks

1. Vertex AI: Best for NLP workflows and pre-built ML algorithms

For robust natural language processing (NLP), multilingual support, and seamless integration with Google’s ecosystem.

2. AWS Bedrock: Best for multi-model access and AWS cloud integration

For access to a variety of foundation models (from providers like Anthropic, Cohere, and Meta), with full AWS integration.

3. Google Cloud AI Infrastructure: Best for scalable ML pipelines and TPU support

For custom AI chips (TPUs), distributed training capabilities, and ML pipelines.

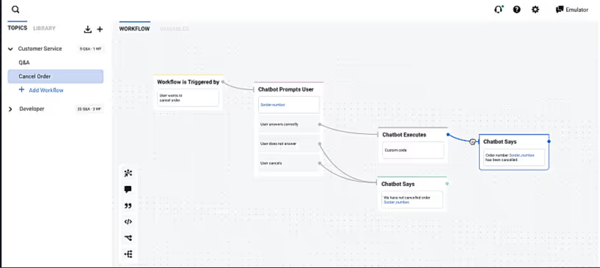

4. Botpress: Best for AI-powered chat automation with human handoff

For enterprise-grade stability, fast model inference, and role-based access control.

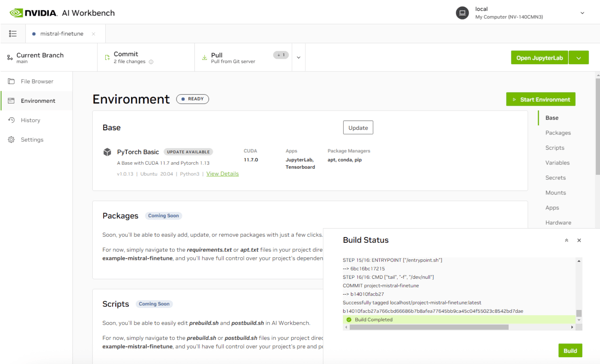

5. Nvidia AI Enterprise: Best for high-performance AI model training

For large neural network support, language tools, and pre-built ML environments, ideal for data science teams.

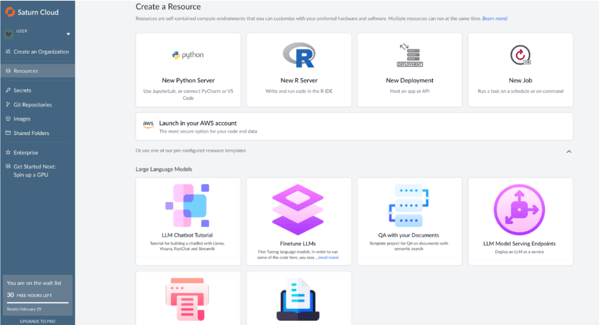

6. Saturn Cloud: Best for scalable Python and AI development

For large neural networks, language tools, and pre-built ML environments, ideal for data science and AI research teams.

Apart from my own analysis, these generative AI infrastructure tools are rated as top solutions in G2’s Grid Report. I’ve included their standout features for easy comparison. Pricing is available on request for most solutions.

6 best Generative AI Infrastructure software I strongly recommend

Generative AI infrastructure software powers the development, deployment, and scaling of models like LLMs and diffusion models. It provides computing resources, ML orchestration, model management, and developer tools to streamline AI workflows.

I found these tools helpful for handling backend complexity, training, fine-tuning, inference, and scaling, so teams can build and run generative AI applications efficiently. They also offer pre-trained models, APIs, and tools for performance, safety, and observability.

Before you invest in a generative AI platform, evaluate its integration capabilities, data privacy policies, and data management features. These tools consume significant GPU/TPU capacity, so make sure they align with your computational resources, hardware needs, and tech stack.

How did I find and evaluate the best generative AI infrastructure software?

I spent weeks trying, testing, and evaluating the best generative AI infrastructure software. I looked at AI-generated content verification, vendor onboarding, security and compliance, cost, and ROI certainty for SaaS companies investing in their own LLMs or generative AI tools.

I also used AI to factor in real-time user reviews, highest-rated features, pros and cons, and pricing for each of these software vendors. By summarizing the key sentiments and market data for these tools, I aim to present an unbiased take on the best generative AI infrastructure software in 2025.

In cases where I couldn’t sign up for and access a tool myself, I consulted verified market research analysts with several years of hands-on experience. They evaluated and analyzed the tools and shortlisted them based on your business requirements. Their expertise, combined with real-time customer feedback from G2 reviews, makes this list of generative AI infrastructure tools useful for B2B businesses investing in AI and ML growth.

The screenshots in this listicle are a mix of images from these vendors’ product profiles and third-party websites. I included both to be as transparent and precise as possible so you can make a data-driven decision.

While your ML and data science teams may already be using AI tools, the scope of generative AI is expanding fast into creative, conversational, and automated domains.

In fact, according to G2’s 2024 State of Software report, every AI product that saw the most profile traffic on G2 in the last four quarters has some kind of generative AI component embedded in it.

This shows that businesses now want to custom-train models, invest in AutoML, and build AI maturity to customize their standard business operations.

What makes Generative AI Infrastructure Software worth it: my opinion

In my opinion, an ideal generative AI infrastructure tool has predefined AI content policies, legal and compliance frameworks, hardware and software compatibility, and end-to-end encryption and user control.

Despite concerns about the financial implications of adopting AI-powered technology, many industries remain committed to scaling their data operations and advancing their cloud AI infrastructure. According to a study by S&P Global, 18% of organizations have already integrated generative AI into their workflows. However, 35% reported abandoning AI initiatives in the past 12 months due to budget constraints. Additionally, 21% cited a lack of executive support as a barrier, while 18% pointed to inadequate tools as a major challenge.

Without a defined system for researching and shortlisting generative AI infrastructure tools, choosing a viable one is a huge gamble for your data science and machine learning teams. Below are the key criteria your teams can look for to operationalize your AI development workflows:

- Scalable compute orchestration with GPU/TPU support: After evaluating dozens of platforms, I found that the best tools stood apart in their ability to scale compute resources dynamically, especially resources optimized for GPU and TPU workloads. This matters because gen AI success depends on rapid iteration and high-throughput training. Buyers should prioritize solutions that support distributed training, autoscaling, and fine-grained resource scheduling to minimize downtime and accelerate development.

- Enterprise-grade security with compliance frameworks: I noticed a stark difference between platforms that merely “list” compliance and those that build it into their infrastructure design. The latter offer native support for GDPR, HIPAA, SOC 2, and more, with granular data access controls, audit trails, and encryption at every layer. For buyers in regulated industries or those handling PII, overlooking this isn’t just risky; it’s a dealbreaker. That’s why I focused on platforms that treat security as a foundational pillar, not just a marketing checkbox.

- First-class support for fine-tuning and custom model hosting: Some platforms only offer plug-and-play access to foundation models. The most future-ready tools I evaluated went further, with robust workflows for importing, fine-tuning, and deploying your own custom LLMs. I prioritized this feature because it gives teams more control over model behavior and enables domain-specific optimization. It also ensures better performance in real-world use cases where out-of-the-box models often fall short.

- Plug-and-play integrations for real enterprise data pipelines: I found that if a platform doesn’t integrate well, it won’t scale. The best tools come with pre-built connectors for common enterprise data sources, like Snowflake, Databricks, and BigQuery, and support API standards like REST, webhooks, and gRPC. Buyers should look for infrastructure that plugs easily into their existing data and MLOps stacks. This reduces setup friction and ensures a faster path to production AI.

- Transparent and granular cost metering and forecasting tools: Gen AI can get expensive, fast. The tools that stood out to me provide detailed dashboards for tracking resource usage (GPU hours, memory, bandwidth). They also include forecasting features that help budget-conscious buyers predict costs under different load conditions. If you’re a stakeholder responsible for justifying ROI, this kind of visibility is invaluable. To stay in control, prioritize platforms that let you track usage at the model, user, and project levels.

- Multi-cloud or hybrid deployment flexibility: Vendor lock-in is a real concern in this space. The most enterprise-ready platforms I reviewed supported flexible deployment options, including AWS, Azure, GCP, and even on-premises via Kubernetes or bare metal. This ensures business continuity, helps meet data residency requirements, and lets IT teams architect around latency or compliance constraints. Buyers aiming for resilience and long-term scale should demand multi-cloud compatibility from day one.
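The cost-metering criterion is easier to picture with a small example. The Python sketch below shows one way to track spend at the model, user, and project levels and produce a rough monthly forecast. The record fields, the flat GPU-hour rate, and the linear load-scaling forecast are all hypothetical assumptions made for illustration. None of it reflects any vendor’s billing API or actual prices.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical flat GPU-hour rate, for illustration only (not a real vendor price).
GPU_HOUR_RATE_USD = 2.50


@dataclass
class UsageRecord:
    """One metered usage event; the field names are illustrative assumptions."""
    model: str
    user: str
    project: str
    gpu_hours: float


def cost_by(records, level):
    """Aggregate cost at the 'model', 'user', or 'project' level."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, level)] += record.gpu_hours * GPU_HOUR_RATE_USD
    return dict(totals)


def forecast_monthly_cost(records, days_observed, load_multiplier=1.0, days_in_month=30):
    """Naive linear forecast: average daily spend scaled by an expected load factor."""
    total = sum(r.gpu_hours for r in records) * GPU_HOUR_RATE_USD
    daily = total / days_observed
    return daily * days_in_month * load_multiplier


records = [
    UsageRecord("llm-a", "alice", "chatbot", 10.0),
    UsageRecord("llm-a", "bob", "chatbot", 4.0),
    UsageRecord("llm-b", "alice", "search", 6.0),
]

print(cost_by(records, "project"))  # {'chatbot': 35.0, 'search': 15.0}
print(forecast_monthly_cost(records, days_observed=5, load_multiplier=2.0))  # 600.0
```

Real platforms meter many more dimensions, such as memory, bandwidth, and tokens. Still, the idea of tagging every usage event with model, user, and project is what makes per-level breakdowns possible.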

As more businesses customize and adopt LLMs to automate their standard operating procedures, AI maturity and infrastructure become pivotal for seamless, efficient data usage and pipeline building.

According to a State of AI Infrastructure report by Flexential, 70% of businesses are devoting at least 10% of their total IT budgets to AI initiatives, including software, hardware, and networking.

This shows how much attention businesses are paying to infrastructure needs like hardware provisioning, distributed processing, latency, and MLOps automation for managing AI stacks.

Out of the 40+ tools I scoured, I shortlisted the top 6 generative AI infrastructure tools that handle legal policies, proprietary data, and AI governance very well. To be included in the generative AI infrastructure category, software must:

- Provide scalable options for model training and inference

- Offer a transparent and flexible pricing model for computational resources and API calls

- Enable secure data handling through features like data encryption and GDPR compliance

- Support easy integration into existing data pipelines and workflows, ideally via APIs or pre-built connectors.

*This data was pulled from G2 in 2025. Some reviews may have been edited for clarity.

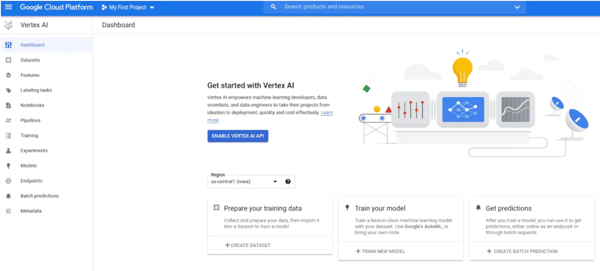

1. Vertex AI: Best for NLP workflows and pre-built ML algorithms

Vertex AI helps you automate, deploy, and publish your ML scripts to a live environment directly from a notebook. It offers ML frameworks, hardware versioning, compatibility, latency, and AI legal policy frameworks to customize and optimize your AI generation lifecycle.

Vertex AI accelerates your AI-powered development workflows and is trusted by small, mid-market, and enterprise businesses. It has a customer satisfaction score of 100, and 97% of users rate it 4 out of 5 stars. It has become immensely popular among organizations looking to scale their AI operations.

What drew me to Vertex AI is how effortlessly it integrates with the broader Google Cloud ecosystem. Everything feels connected: data prep, model training, and deployment, all in one workflow.

With Vertex AI’s Gen AI Studio, you can easily access both first-party and third-party models. You can spin up LLMs like PaLM or open-source models through Model Garden, which makes experimentation super flexible. Plus, the pipeline UI’s drag-and-drop support and built-in notebooks help optimize the end-to-end process.

One of the premium features I relied on heavily is the managed notebooks and training pipelines. They offer serious compute power and scalability. I like that I can use pre-built containers, tap into Google’s optimized TPU/V100 infrastructure, and focus on my model logic instead of wrangling infrastructure.

Vertex AI also offers Triton Inference Server support, which is a big win for efficient model serving. And let’s not forget Vertex AI Search and Conversation. These features have become indispensable for building domain-specific LLM and retrieval-augmented generation apps without getting tangled in backend complexity.

G2 review data clearly shows that users appreciate the ease of use. People like me are especially drawn to the intuitive UI.

Some G2 reviews also mention how easy it is to migrate from Azure to Vertex AI. G2 reviewers consistently highlight the platform’s clean design, strong model deployment tools, and the power of Vertex Pipelines. Several even pointed out that the GenAI offerings have a “course-like” feel, like having your own AI learning lab built into your project workspace.

But not everything is perfect, and I’m not the only one who thinks so. Several G2 reviewers point out that while Vertex AI is incredibly powerful, the pay-as-you-go pricing can get expensive fast, especially for startups or teams running long experiments. That said, others appreciate that the built-in AutoML and ready-to-deploy models save time and reduce dev effort overall.

There’s also a bit of a learning curve. G2 user insights indicate that setting up pipelines or integrating with tools like BigQuery can feel overwhelming at first. Still, several G2 customer reviewers highlight that once you’re up and running, managing your full ML workflow in one place is a game-changer.

While Vertex AI’s documentation is decent in places, several verified reviewers on G2 found it inconsistent, especially for features like custom training or Vector Search. That said, many also found the platform’s support and community resources helpful in filling those gaps.

Despite these hurdles, Vertex AI continues to impress with its scalability, flexibility, and production-ready features. Whether you’re building quick prototypes or deploying robust LLMs, it gives you everything you need to build confidently.

What I like about Vertex AI:

- Vertex AI unifies the entire ML workflow, from data prep to deployment, on one platform. AutoML and seamless integration with BigQuery make model building and data handling easy and efficient.

- Vertex AI’s user-friendly, efficient framework makes model building and implementation easy. Its streamlined integration helps you reach your goals with minimal steps and maximum impact.

What do G2 users like about Vertex AI:

“The best thing I like is that Vertex AI is a place where I can perform all my machine-learning tasks in one place. I can build, train, and deploy all my models without switching to any other tools. It’s super comfortable to use, saves time, and keeps my workflow simple. The most helpful part is that I can even train and deploy complex models, and it works very well with BigQuery, which lets me automate the model process and make predictions. Vertex AI is super flexible for AutoML and custom training.”

– Vertex AI Review, Triveni J.

What I dislike about Vertex AI:

- It can become quite costly, especially with features like AutoML, which can drive up expenses quickly. Despite appearances, it’s not as plug-and-play as it seems.

- According to G2 reviewers, the documentation is helpful but can be lengthy for beginners, and tasks like creating pipelines require more technical knowledge.

What do G2 users dislike about Vertex AI:

“While Vertex AI is powerful, there are a few things that could be better. The pricing can add up quickly if you are not careful with the resources you use, especially with large-scale training jobs. The UI is clean, but sometimes navigating between different components like datasets, models, and endpoints feels clunky. Some parts of the documentation felt a bit too technical.”

– Vertex AI Review, Irfan M.

Learn how to scale your scripting and coding projects and take your production to the next level with the 9 best AI code generators in 2025, analyzed by my peer Sudipto Paul.

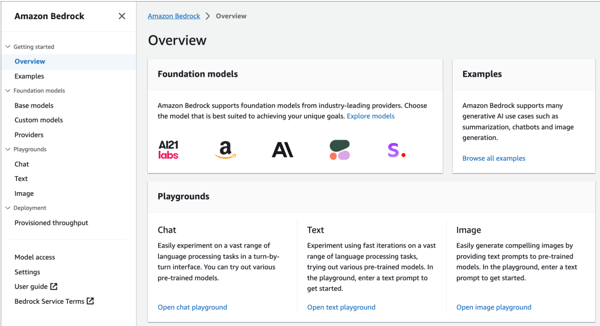

2. AWS Bedrock: Best for multi-model access and AWS cloud integration

AWS Bedrock is an efficient generative AI and cloud orchestration tool. It lets you work with foundation models in a hybrid environment and build generative AI applications in a flexible, transparent way.

According to G2 data, AWS Bedrock has a 77% market presence score, and 100% of users rated it 4 out of 5 stars. Both figures point to its reliability and agility in the generative AI space.

When I first started using AWS Bedrock, what stood out immediately was how smoothly it integrated with the broader AWS ecosystem. It felt native, like it belonged right alongside my existing cloud tools. I didn’t have to worry about provisioning infrastructure or juggling APIs for every model I wanted to test. That level of plug-and-play capability is refreshing, especially when working across multiple foundation models.

What I like most is the variety of models available out of the box. Whether it’s Anthropic’s Claude, Meta’s LLaMA, or Amazon’s own Titan models, I could easily switch between them for different use cases. This model-agnostic approach meant I wasn’t locked into one vendor. That’s a big win when you’re trying to benchmark or A/B test for quality, speed, or cost efficiency. Many of my retrieval-augmented generation (RAG) experiments performed well here, thanks to Bedrock’s embedding-based retrieval capabilities, which cut down the time I spent building pipelines from scratch.
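When models are interchangeable, side-by-side benchmarking becomes straightforward. The Python sketch below is a hypothetical, provider-neutral harness that compares models on average latency and estimated output cost for the same prompts. Each “model” here is just a Python callable. The stub functions and the per-1K-token price stand in for real Bedrock calls, which would go through the AWS SDK instead, and for real pricing. The whitespace token count is also a crude assumption, since real tokenizers vary by model. Quality scoring is deliberately left out because it requires an evaluation method of your own.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ModelUnderTest:
    name: str
    generate: Callable[[str], str]   # prompt -> completion
    usd_per_1k_output_tokens: float  # hypothetical price, for illustration only


def rough_token_count(text: str) -> int:
    """Crude whitespace-based token estimate; real tokenizers differ by model."""
    return len(text.split())


def benchmark(models: List[ModelUnderTest], prompts: List[str]) -> Dict[str, dict]:
    """Run every prompt through every model and collect latency and cost estimates."""
    results = {}
    for model in models:
        total_latency = 0.0
        total_tokens = 0
        for prompt in prompts:
            start = time.perf_counter()
            output = model.generate(prompt)
            total_latency += time.perf_counter() - start
            total_tokens += rough_token_count(output)
        results[model.name] = {
            "avg_latency_s": total_latency / len(prompts),
            "output_tokens": total_tokens,
            "est_cost_usd": total_tokens / 1000 * model.usd_per_1k_output_tokens,
        }
    return results


# Stub "models" standing in for real API calls (hypothetical).
def terse_model(prompt: str) -> str:
    return "short answer"


def verbose_model(prompt: str) -> str:
    return " ".join(["detail"] * 50)


models = [
    ModelUnderTest("terse", terse_model, usd_per_1k_output_tokens=1.0),
    ModelUnderTest("verbose", verbose_model, usd_per_1k_output_tokens=1.0),
]
report = benchmark(models, ["What is RAG?", "Summarize our refund policy."])
for name, stats in report.items():
    print(name, stats["output_tokens"], round(stats["est_cost_usd"], 4))
```

In this toy run, both stubs have the same per-token price, yet the verbose one ends up 25 times more expensive. That illustrates why token-heavy models can produce unexpected costs at scale.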

The interface is beginner-friendly, which surprised me given AWS’s reputation for being a bit complex. With Bedrock, I could prototype an app without diving into low-level code. For someone who’s more focused on outcomes than infrastructure, that’s gold. Plus, since everything lives inside AWS, I didn’t have to worry about security and compliance; it inherited the maturity and tooling of AWS’s cloud platform.

Now, here’s the thing: every product has its quirks. Bedrock delivers solid infrastructure and model flexibility, but G2 user insights flag some confusion around pricing. Several G2 reviewers mentioned unexpected costs when scaling inference, especially with token-heavy models. Still, many appreciated being able to choose models that fit both their performance needs and their budget.

Integration with AWS is smooth, but orchestration visibility could be stronger. According to G2 customer reviewers, there’s no built-in way to benchmark or visually track model sequences. That said, they also praised how easy it is to run multi-model workflows compared to manual setups.

Getting started is quick, but customization and debugging are limited. G2 reviewers noted challenges with fine-tuning private models and with in-depth troubleshooting. Even so, users consistently highlighted the platform’s low-friction deployment and reliability in production.

The documentation is solid for basic use cases, but a few G2 user insights called out gaps in advanced guidance. Despite that, reviewers still liked how intuitive Bedrock is for getting up and running quickly.

Overall, AWS Bedrock offers a robust, flexible GenAI stack. Its ease of use, model variety, and seamless AWS integration outweigh its few limitations.

What I like about AWS Bedrock:

- The Agent Builder is super helpful. You can build and test agents quickly without dealing with a complex setup.

- AWS Bedrock offers access to a wide range of LLMs, which helps you choose the right model for each use case.

What do G2 users like about AWS Bedrock:

“AWS Bedrock contains all LLM models, which is helpful for choosing the right model for the use cases. I built several agents that help across the software development lifecycle, and by using Bedrock, I was able to achieve the output faster. Also, the security features provided under Bedrock really help to build chatbots and reduce errors or hallucinations for text generation and virtual assistant use cases.”

– AWS Bedrock Review, Saransundar N.

What I dislike about AWS Bedrock:

- If a product isn’t already built on the AWS ecosystem, using Bedrock can lead to vendor lock-in. And very niche scenarios require a lot of tweaking.

- According to G2 reviews, Bedrock has a steep initial learning curve despite solid documentation.

What do G2 users dislike about AWS Bedrock:

“AWS Bedrock can be costly, especially for small businesses, and it ties users tightly to the AWS ecosystem, limiting flexibility. Its complexity poses challenges for newcomers, and while it offers foundation models, it’s less adaptable than open-source options. Additionally, the documentation isn’t always user-friendly, making it harder to get up to speed quickly.”

– AWS Bedrock Review, Samyak S.

Looking for a tool to flag redundant or ambiguous AI content? Check out the top AI detectors in 2025 to catch unethical automation.

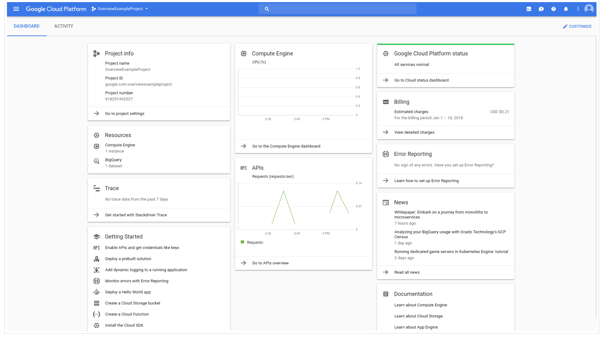

3. Google Cloud AI Infrastructure: Best for scalable ML pipelines and TPU support

Google Cloud AI Infrastructure is a scalable, flexible, and agile generative AI infrastructure platform that supports LLM operations and model management for data science and machine learning teams. It offers high-performance computational power to run, manage, and deploy your final AI code into production.

Based on G2 reviews, Google Cloud AI Infrastructure consistently receives a high customer satisfaction score. 100% of users across small, mid-market, and enterprise segments rate it 4 out of 5 stars. That makes it an easy-to-use, cost-efficient generative AI platform for operationalizing your AI-powered tools.

What really strikes me is how seamless and scalable the platform is, especially with large-scale ML models. From data preprocessing to training and deployment, everything flows smoothly. The platform handles both deep learning and classical ML workloads very well, with strong integration across services like Vertex AI, BigQuery, and Kubernetes.

One of the standout aspects is performance. When you spin up custom TPU or GPU VMs, the compute power is there when you need it, with no more waiting around for queued jobs. This kind of flexibility is gold for teams managing high-throughput training cycles or real-time inference.

I personally found its high-performance data pipelines useful when I needed to train a transformer model on massive datasets. Pair that with tools like AI Platform Training and Prediction, and you get an end-to-end workflow that just makes sense.

Another thing I like is the integration across Google Cloud’s ecosystem. Whether I’m using AutoML for faster prototyping or orchestrating workflows via Cloud Functions and Cloud Run, it all just works.

And Kubernetes support is phenomenal. I’ve run hybrid AI/ML workloads on Google Kubernetes Engine (GKE), which is tightly coupled with Google Cloud’s monitoring and security stack, so managing containers never feels like a burden.

The platform offers a seamless, scalable experience for large AI/ML models. However, several G2 reviewers note a steep learning curve, especially for teams with no prior experience with cloud-based ML infrastructure. That said, once you get the hang of it, the wide range of tools and services becomes incredibly powerful.

G2 users have praised the flexibility of Google Cloud’s compute resources, but some customer reviewers mention that support can be slower than expected during critical moments. Still, the documentation and community resources usually cover most troubleshooting needs.

The AI infrastructure integrates beautifully with other Google Cloud services, making workflows more efficient. However, G2 user insights indicate that cost visibility and billing complexity can be a challenge without diligent monitoring. Fortunately, features like per-second billing and sustained use discounts help optimize spend when used effectively.
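Per-second billing matters most for short, bursty jobs. The Python arithmetic below compares billing by the second against a hypothetical baseline that rounds every job up to a full hour. The hourly rate and job durations are made-up assumptions. Google Cloud’s actual rules, including any minimum billing increments and sustained use discount tiers, are not modeled here.

```python
import math

# Hypothetical accelerator VM price, for illustration only.
HOURLY_RATE_USD = 4.00


def per_second_cost(durations_s, hourly_rate=HOURLY_RATE_USD):
    """Bill each job for exactly the seconds it ran."""
    return sum(durations_s) * hourly_rate / 3600


def per_hour_cost(durations_s, hourly_rate=HOURLY_RATE_USD):
    """Comparison baseline: bill each job rounded up to whole hours."""
    return sum(math.ceil(d / 3600) for d in durations_s) * hourly_rate


# Ten short evaluation or fine-tuning jobs of 12 minutes each.
jobs = [12 * 60] * 10
print(per_second_cost(jobs))  # 8.0
print(per_hour_cost(jobs))    # 40.0
```

With these assumed numbers, the same two hours of total compute cost five times more when every 12-minute job is billed as a full hour. This is why billing granularity is worth checking before you schedule many small jobs.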

Google Cloud delivers impressive power and performance with tools like TPUs and custom ML pipelines. That said, a few G2 user reviewers point out that simpler architecture and configuration, especially for newcomers, could make onboarding smoother. Even so, once teams acclimate, the platform proves itself with reliable, high-throughput training capabilities.

G2 reviewers strongly praise the infrastructure’s handling of high-volume workloads. However, some users have observed that the UI and certain console functions could benefit from a more intuitive design. Even so, the consistency and security across services continue to earn the trust of enterprise users.

What I like about Google Cloud AI Infrastructure:

- Google Cloud AI continually improves reasoning and performance across large-scale AI models. I like how it simplifies orchestration by using specialized cloud resources to improve efficiency and reduce complexity.

- Cloud AI Infrastructure lets you choose the right processing power, like GPUs or TPUs, for your AI needs. It’s easy to use and integrates seamlessly with Vertex AI for managed deployments.

What do G2 users like about Google Cloud AI Infrastructure:

“Integration is both easy to use and extremely useful, streamlining my workflow and boosting efficiency. The interface is friendly, and a stable connection ensures smooth communication. Overall user experience is great. Support is helpful and ensures any issues are quickly resolved. There are many resources available for new users, too.”

– Google Cloud AI Infrastructure Review, Shreya B.

What I dislike about Google Cloud AI Infrastructure:

- The overall experience is smooth and powerful, but local language support is lacking. Expanding it would make an already useful tool even more accessible to diverse user bases.

- Some users feel that the user experience and customer support could be more engaging and responsive.

What do G2 users dislike about Google Cloud AI Infrastructure:

“A steep learning curve, cost, and slow support, I would say.”

– Google Cloud AI Infrastructure Review, Jayaprakash J.

4. Botpress: Best for AI-powered chat automation with human handoff

Botpress offers a low-code/no-code framework that helps you create, run, monitor, and optimize AI agents. You can deploy those agents across multiple software ecosystems to deliver a superior customer experience.

With Botpress, you can enable rapid AI automation, model generation, and validation, and fine-tune your LLM workflows without impacting your network bandwidth.

With an overall customer satisfaction score of 66 on G2, Botpress is steadily gaining visibility and attention as a flexible gen AI solution. In addition, 100% of users gave it a 4-star rating, citing high AI energy efficiency and GDPR adherence.

What first pulled me in was how intuitive the visual flow builder is. Even if you’re not super technical, its low-code interface lets you start crafting sophisticated bots.

But what makes it shine is that it doesn’t stop there. If you’re a developer, the ProCode capabilities let you dive deeper, creating logic-heavy workflows and custom modules with fine-grained control. I especially appreciated native database searches in natural language and the flexible transitions. It genuinely feels like you can mold the bot’s brain however you want.

One of my favorite aspects is how seamlessly Botpress integrates with existing tools. You can connect it to various services across the stack, from CRMs to internal databases, without much hassle.

You can deploy customer service bots across multiple channels like web, Slack, and MS Teams seamlessly. And it’s not just a chatbot; it’s an automation engine. I’ve used it to build bots that serve both customer-facing and internal use cases. The knowledge base capabilities, particularly when paired with embeddings and vector search, turn the bot into a genuinely useful assistant.
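Here is a look at the retrieval step behind an embeddings-plus-vector-search knowledge base, sketched in Python. Each article is stored as a vector, and a query returns the articles whose vectors have the highest cosine similarity to the query vector. The three-dimensional vectors below are hand-written toy values standing in for a real embedding model’s output. The brute-force search is a simplification, and none of this is Botpress’s internal implementation.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest-neighbor search over an in-memory index."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy, hand-written "embeddings" for three knowledge-base articles (hypothetical).
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.9, 0.1],
    "password-reset": [0.0, 0.1, 0.95],
}

# Pretend embedding of the question "How do I get my money back?"
query = [0.85, 0.2, 0.05]
for doc_id, score in top_k(query, index):
    print(doc_id, round(score, 3))
```

Production vector databases replace the linear scan with approximate nearest-neighbor indexes. The ranking idea is the same, and the top-ranked articles are what a RAG chatbot passes to the LLM as context.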

Now, let’s talk about the tiered plans and premium features. Even the free tier gives you generous access to core functionality like flow authoring, channel deployment, and testing. Once you move to the Professional and Enterprise plans, you get features like private cloud or on-prem deployment, advanced analytics, role-based access control (RBAC), and custom integrations.

The enterprise-grade observability tools and more granular tracking of chatbot behavior are a huge plus for teams running critical workflows at scale. I especially appreciated the premium NLP models and higher token limits, which allowed for more nuanced and expansive conversations. These were essential when our bot scaled up to handle high traffic and larger knowledge bases.

Botpress is clearly on the right track. G2 customer reviewers often mention how the platform keeps evolving with frequent updates and a responsive dev team. But there are some issues.

One issue I’ve noticed during heavier usage is occasional performance lag. It isn’t a deal-breaker by any means, and fortunately it doesn’t happen often. Still, G2 reviewers have reported the same thing, especially when handling high traffic or running more complex workflows. The platform has scaled impressively over time, though, and with each release, things feel smoother and more optimized.

Another area where I’ve had to be a bit more hands-on is the documentation. While there’s plenty of content to get started, including some fantastic video walkthroughs, more technical examples for edge cases would help. G2 user insights suggest others have also leaned on the Botpress community or trial and error when diving into advanced use cases.

And yes, there’s a bit of a learning curve. But honestly, that’s expected when a tool offers this much control and customization. G2 reviewers who’ve spent time exploring the deeper layers of the platform say the same: the initial ramp-up takes time, but the payoff is substantial. The built-in low-code tooling helps flatten that curve a lot faster than you’d think.

Even with a few quirks, I find myself consistently impressed. Botpress gives you the creative control to build exactly what you need while still offering a beginner-friendly environment. G2 sentiment reflects this balance; users appreciate the power once they’re up to speed, and I couldn’t agree more.

What I like about Botpress:

- Botpress is both powerful and user-friendly. I also loved that it has a large user base on Discord, where the community openly helps one another.

- I liked the combination of LowCode and ProCode, along with the range of tool integrations available for building RAG-based chatbots quickly.

What do G2 users like about Botpress:

“The flexibility of the product and its ability to solve multiple problems in a short development cycle are revolutionary. The ease of implementation is such that business users can spin up their own bots. Its ability to integrate with other platforms expands the capability of the platform significantly.”

– Botpress Review, Ravi J.

What I dislike about Botpress:

- Sometimes, combining autonomous and standard nodes leads to infinite loops, and there’s no easy way to stop them. Collaborative editing is also glitchy, with changes not always saving properly.

- According to G2 reviewers, a downside of self-hosting is that it can be complex and requires technical expertise for setup and maintenance.

What do G2 users dislike about Botpress:

“If you are not the kind of person who reads or watches videos to learn, then you might not be able to catch up. Yes, it is very easy to set up, but if you want to build a more complex AI bot, there are things you need to dig deeper into; hence, there are some learning curves.”

– Botpress Review, Samantha W.

5. Nvidia AI Enterprise: Best for high-performance AI model training

Nvidia AI Enterprise offers reliable tools to support, manage, and optimize the performance of your AI processes, along with notebook automation to fine-tune your script generation capabilities.

With Nvidia AI, you can run your AI models in a compatible integrated studio environment and embed AI functionality into your live projects through API integration for greater efficiency.

According to G2 data, Nvidia is a strong contender in the gen AI space, with over 90% of users willing to recommend it to peers and 64% of businesses actively considering it for their infrastructure needs. Also, around 100% of users have rated it 4 out of 5 stars, pointing to the product’s strong operability and robustness.

What I like most is how seamlessly it bridges the gap between hardware acceleration and enterprise-ready AI infrastructure. The platform offers deep integration with Nvidia GPUs, and that’s a big plus; training, fine-tuning, and inference are all optimized to run lightning-fast. Whether I’m spinning up a model on a local server or scaling up across a hybrid cloud, performance stays consistently high.

One of the standout things for me has been the flexibility. Nvidia AI Enterprise doesn’t lock me into a rigid ecosystem. It’s compatible with major ML frameworks like TensorFlow, PyTorch, and RAPIDS, and integrates smoothly with VMware and Kubernetes environments. That makes deployment far less of a headache, especially in production scenarios where stability and scalability are non-negotiable.

It also includes pretrained models and tools like the NVIDIA TAO Toolkit, which saves me from reinventing the wheel every time I start a new project.

The UI/UX is pretty intuitive, too. I didn’t need weeks of onboarding to get comfortable. The documentation is rich and well organized, and there’s a clear effort to make things “enterprise-grade” without being overly complex.

Features like optimized GPU scheduling, data preprocessing pipelines, and integration hooks for MLOps workflows are all thoughtfully packaged. From a technical standpoint, it’s rock solid for computer vision, natural language processing, and even more niche generative AI use cases.

In terms of subscription and licensing, the tiered plans are clear-cut and mostly fair given the firepower you’re accessing. The higher-end plans unlock more aggressive GPU usage profiles, early access to updates, and premium support levels. If you’re running high-scale inference tasks or multi-node training jobs, these upper tiers are worth the investment.

That said, Nvidia AI Enterprise isn’t perfect. The platform offers solid integration with major frameworks and delivers high performance for AI workloads. However, a common theme among G2 customer reviewers is the steep learning curve, especially for those new to the Nvidia ecosystem. Still, once users get comfortable, many find the workflow highly efficient and the GPU acceleration well worth the ramp-up.

The toolset is undeniably comprehensive, supporting everything from data pipelines to large-scale model deployment. But G2 reviewer insights also point out that pricing can be a barrier, particularly for smaller teams, since licensing and hardware costs add up. Even so, several users note that the enterprise-grade performance justifies the investment when scaled effectively.

While the platform runs reliably under load, G2 sentiment analysis shows that customer support can be inconsistent, especially for mid-tier plans. Some users cite delays in resolving issues or limited help with newer APIs. Still, improvements in documentation and frequent ecosystem updates suggest Nvidia is actively working to close these gaps, something a few G2 users have called out positively.

Despite these challenges, Nvidia AI Enterprise delivers where it matters: speed, scalability, and enterprise-ready AI. If you’re building serious AI products, it’s a strong partner; just expect a bit of a learning curve and upfront investment.

What I like about Nvidia AI Enterprise:

- Working with Nvidia is like having a full toolbox for AI development, with everything you need from model preparation to AI deployment.

- Nvidia AI Enterprise is optimized for GPU performance and offers comprehensive AI tools, enterprise-grade support, and seamless integration with existing AI infrastructure.

What do G2 users like about Nvidia AI Enterprise:

“It’s like having a full toolbox for AI development, with everything you need from data preparation to model deployment. Plus, the performance boost you get from NVIDIA GPUs is fantastic! It’s like having a turbocharger for your AI projects.”

– Nvidia AI Enterprise Review, Jon Ryan L.

What I dislike about Nvidia AI Enterprise:

- The cost of licensing and the required hardware can be quite high, potentially making it less accessible for smaller businesses.

- The platform is highly optimized specifically for Nvidia GPUs, which can limit flexibility if you want to use other hardware with the tool.

What do G2 users dislike about Nvidia AI Enterprise:

“If you don’t have an NVIDIA GPU or DPU, then you need some additional online resources to configure and use it; hardware with powerful resources is a must.”

– Nvidia AI Enterprise Review, Muazam Bokhari S.

6. Saturn Cloud: Best for scalable Python and AI development

Saturn Cloud is an AI/ML platform that helps data teams and engineers build, manage, and deploy their AI/ML applications in multi-cloud, on-prem, or hybrid environments.

With Saturn Cloud, you can quickly set up a testing environment for new tool ideas, features, and integrations, and run trial-and-error experiments on your customized applications.

Based on G2 review data, Saturn Cloud has consistently earned a satisfaction rate of 64% among buyers. 100% of users recommend it, citing features like AI efficiency optimization and the quality of its AI documentation across business segments, and they rate it 4 out of 5 based on their experience with the tool.

I’ve been using Saturn Cloud for a while now, and honestly, it’s been excellent for scaling up my data science and machine learning workflows. Right from the start, the onboarding experience was smooth. I didn’t need a credit card to try it out, and spinning up a JupyterLab notebook with access to both CPUs and GPUs took less than five minutes.

What really stood out to me was how seamlessly it integrates with GitHub and VS Code over a secure shell (SSH) connection. I never have to waste time uploading files manually; it just works.

One of the first things I appreciated was how generous the free tier is compared to other platforms. With ample disk space and access to CPU (and even limited GPU!) compute, it felt like I could do serious work without constantly worrying about resource limits. When I enrolled in a course, I was even granted extra hours after a quick chat with their responsive support team via Intercom.

Now, let’s talk about performance. Saturn Cloud gives you a buffet of ready-to-go environments packed with the latest versions of deep learning and data science libraries. Whether I’m training deep learning models on a GPU instance or spinning up a Dask cluster for parallel processing, it’s highly reliable and surprisingly fast.
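A Dask cluster speeds up processing by partitioning a large dataset into chunks, working on those chunks in parallel, and combining the partial results. The sketch below illustrates that split-apply-combine pattern with Python’s standard-library `concurrent.futures` as a stand-in; it is not Dask’s API. It uses a thread pool for simplicity, so it runs on one machine, and for CPU-bound pure-Python work a process pool or a real Dask cluster would be needed to get a true parallel speedup. The `chunk_stats` and `parallel_mean` helpers and the default chunk size are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_stats(chunk: list[float]) -> tuple[float, int]:
    """Apply step: return the partial sum and element count for one chunk."""
    return sum(chunk), len(chunk)


def parallel_mean(values: list[float], chunk_size: int = 1000, workers: int = 4) -> float:
    """Compute a mean by splitting the data, processing chunks concurrently, then combining."""
    if not values:
        raise ValueError("values must not be empty")
    # Split step: partition the data, similar in spirit to a Dask collection's partitions
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    # Apply step: each worker handles whole chunks independently
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(chunk_stats, chunks))
    # Combine step: merge the partial sums and counts into the final result
    total = sum(partial_sum for partial_sum, _ in partials)
    count = sum(partial_count for _, partial_count in partials)
    return total / count


if __name__ == "__main__":
    data = [float(i) for i in range(10_000)]
    print(parallel_mean(data))  # prints 4999.5
```

Because each chunk only returns a small (sum, count) pair, the combine step stays cheap no matter how large the dataset grows, which is the same property that lets a cluster scale this kind of aggregation across many workers.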

The platform is built to be flexible, too; you get one-click federated login, custom Docker images, and autoscaling workspaces that shut down automatically to save credits (and sanity).

The premium plans bring even more horsepower. You can choose from an array of instance types (CPU-heavy, memory-heavy, or GPU-accelerated) and configure high-performance Dask clusters with just a few clicks. It’s also refreshing how clearly they lay out their pricing and usage, with no sneaky fees like on some cloud platforms.

For startups and enterprise teams alike, the ability to create persistent environments, use private Git repos, and manage secrets makes Saturn Cloud a viable alternative to AWS SageMaker, Google Colab Pro, or Azure ML.

That said, it’s not without flaws. While many users praise how quickly they can get started, some G2 reviewers noted that the free tier timer can be a bit too aggressive, ending sessions mid-run. Still, for a platform that doesn’t even require a credit card to launch GPU instances, that tradeoff feels manageable.

Most G2 customer reviewers found the setup smooth, especially with prebuilt environments and intuitive scaling. However, a few ran into hiccups when dealing with OpenSSL versions or managing secrets. Once configured, though, the system delivers reliable and powerful performance across workloads.

The flexibility to run anything from Jupyter notebooks to full Dask clusters is a big plus. A handful of G2 user insights mentioned that containerized workflows can be tricky to deploy because of the Docker backend, but the platform’s customization options help offset that.

While onboarding is generally fast, some G2 reviewers felt the platform could use more tutorials, especially for cloud beginners. Once you get familiar with the environment, though, it really clears the path for experimentation and serious ML work.

What I like about Saturn Cloud:

- Saturn Cloud is easy to use and has a responsive customer service team available through built-in Intercom chat.

- Saturn Cloud keeps running on a remote server even when your connection drops. You can access it again once you’re back online.

What do G2 users like about Saturn Cloud:

“Great, powerful tool with all the needed Python Data Science libraries, quick Technical Support, flexible settings for servers, great for Machine Learning Projects, GPU, and enough Operational memory, very powerful user-friendly Product with enough resources.”

– Saturn Cloud Review, Dasha D.

What I dislike about Saturn Cloud:

- I wish regular users had more resources available, like more GPU time per month, as certain models require much more than a couple of hours to train.

- Another drawback is that the storage space is too small for uploading large datasets. According to G2 reviewers, there’s often not enough space to save processed datasets.

What do G2 users dislike about Saturn Cloud:

“While the platform excels in many areas, I’d love to see a wider variety of unrestricted Large Language Models readily available. Although you can build them in a fresh VM, it would be nice to have pre-configured stacks to save time and effort.”

– Saturn Cloud Review, AmenRey N.

Best Generative AI Infrastructure Software: Frequently Asked Questions (FAQs)

1. Which company offers the most reliable AI infrastructure tools?

Based on the top generative AI infrastructure tools covered in this list, AWS stands out as the most reliable due to its enterprise-grade scalability, extensive AI/ML services (like SageMaker), and robust global infrastructure. Google Cloud also ranks highly for its strong foundation models and integration with Vertex AI.

2. What are the top generative AI software providers for small businesses?

Top generative AI software providers for small businesses include OpenAI, Cohere, and Writer, thanks to their accessible APIs, affordable pricing tiers, and ease of integration. These tools offer strong out-of-the-box capabilities without requiring heavy infrastructure or ML expertise.

3. What’s the best generative AI infrastructure for my tech startup?

For a tech startup, Google Vertex AI and AWS Bedrock are top choices. Both offer scalable APIs, access to multiple foundation models, and flexible pricing. OpenAI’s platform is also excellent if you prioritize rapid prototyping and high-quality language models like GPT-4.

4. What’s the best generative AI platform for app development?

Google Vertex AI is the best generative AI platform for app development thanks to its seamless integration with Firebase and strong support for custom model tuning. OpenAI is also a top pick for quickly integrating advanced language capabilities via API, making it ideal for chatbots, content generation, and user-facing features.

5. What’s the most recommended generative AI infrastructure for software companies?

AWS Bedrock is the most recommended generative AI infrastructure for software companies thanks to its model flexibility, scalability, and enterprise-grade tooling. Google Vertex AI and Azure AI Studio are also widely used for their robust MLOps support and integration with existing cloud ecosystems.

6. What AI infrastructure do service companies use most?

For service companies, OpenAI, Google Vertex AI, and AWS Bedrock are the most commonly used AI infrastructure tools. They offer plug-and-play APIs, support for automation and chat interfaces, and easy integration with CRM or customer service platforms, making them ideal for scaling client-facing operations.

7. What’s the most efficient AI infrastructure software for digital services?

The most efficient AI infrastructure software for digital services is OpenAI, thanks to its powerful language models and easy API integration. Google Vertex AI is also highly efficient, offering scalable deployment, model customization, and simple integration with digital workflows and analytics tools.

8. What are the best options for generative AI infrastructure in the SaaS industry?

For the SaaS industry, the best generative AI infrastructure options are AWS Bedrock, Google Vertex AI, and Azure AI Studio. They offer scalable APIs, multi-model access, and secure deployment. Databricks is also strong for SaaS teams managing large user data pipelines and training custom models.

9. What are the best generative AI toolkits for launching a new app?

The best generative AI toolkits for launching a new app are OpenAI for fast integration of language capabilities, Google Vertex AI for custom model training and deployment, and Hugging Face for open-source flexibility and access to prebuilt models. These platforms balance speed, customization, and scalability for new app development.

Higher infra, higher AI effectivity

Before you shortlist the best generative AI infrastructure solution for your teams, evaluate your business goals, existing resources, and resource allocation workflows. One of the defining aspects of generative AI tools is their ability to integrate with existing legacy systems without adding compliance or governance overhead.

In my research, I also found that reviewing AI content legal policies and vendor complexity issues for generative AI infrastructure solutions is important to make sure you don’t put your data at risk. While you’re evaluating your options and looking for hardware- and software-based solutions, feel free to come back to this list for expert advice.
