AI is creating value for everyone, from researchers in drug discovery to quantitative analysts navigating financial market changes.

The faster an AI system can produce tokens, the units of data strung together to form outputs, the greater its impact. That’s why AI factories are key, providing the most efficient path from “time to first token” to “time to first value.”

AI factories are redefining the economics of modern infrastructure. They produce intelligence by transforming data into valuable outputs (tokens, predictions, images, proteins or other forms) at massive scale.

They help enhance three key aspects of the AI journey: data ingestion, model training and high-volume inference. AI factories are being built to generate tokens faster and more accurately, using three critical technology stacks: AI models, accelerated computing infrastructure and enterprise-grade software.

Read on to learn how AI factories are helping enterprises and organizations around the world convert the most valuable digital commodity, data, into revenue potential.

From Inference Economics to Value Creation

Before building an AI factory, it’s important to understand the economics of inference: how to balance costs, energy efficiency and an increasing demand for AI.

Throughput refers to the volume of tokens that a model can produce in a given amount of time. Latency is how long the model takes to respond, often measured as time to first token (how long it takes before the first output appears) and time per output token (how fast each additional token comes out). Goodput is a newer metric, measuring how much useful output a system can deliver while hitting key latency targets.
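As a rough illustration of how these three metrics relate, the following Python sketch computes them from a handful of made-up request records. The `Request` fields, the sample numbers and the latency targets are all assumptions chosen for the example, not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One completed inference request (hypothetical record layout)."""
    ttft_s: float        # time to first token, in seconds
    total_s: float       # time from request start to last token, in seconds
    output_tokens: int   # number of tokens generated


def time_per_output_token(r: Request) -> float:
    """Average gap between tokens after the first one."""
    if r.output_tokens <= 1:
        return 0.0
    return (r.total_s - r.ttft_s) / (r.output_tokens - 1)


def throughput(requests, window_s: float) -> float:
    """Tokens produced per second across all requests in the window."""
    return sum(r.output_tokens for r in requests) / window_s


def goodput(requests, window_s: float, max_ttft_s: float, max_tpot_s: float) -> float:
    """Tokens per second, counting only requests that met both latency targets."""
    good = [
        r for r in requests
        if r.ttft_s <= max_ttft_s and time_per_output_token(r) <= max_tpot_s
    ]
    return sum(r.output_tokens for r in good) / window_s


# Made-up sample: three requests served within a 10-second window.
sample = [
    Request(ttft_s=0.2, total_s=2.2, output_tokens=101),  # fast start, fast tokens
    Request(ttft_s=0.4, total_s=5.4, output_tokens=101),  # fast start, slow tokens
    Request(ttft_s=1.5, total_s=3.5, output_tokens=101),  # slow start, fast tokens
]

print(throughput(sample, window_s=10.0))
print(goodput(sample, window_s=10.0, max_ttft_s=0.5, max_tpot_s=0.03))
```

With these assumed targets, only the first request meets both latency limits, so goodput comes out at one third of raw throughput even though every request produced the same number of tokens.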

User experience is key for any software application, and the same goes for AI factories. High throughput means smarter AI, and lower latency ensures timely responses. When both of these measures are balanced properly, AI factories can provide engaging user experiences by quickly delivering helpful outputs.

For example, an AI-powered customer service agent that responds in half a second is far more engaging and valuable than one that responds in five seconds, even if both ultimately generate the same number of tokens in the answer.

Companies can take the opportunity to place competitive prices on their inference output, resulting in more revenue potential per token.

Measuring and visualizing this balance can be difficult, which is where the concept of a Pareto frontier comes in.

AI Factory Output: The Value of Efficient Tokens

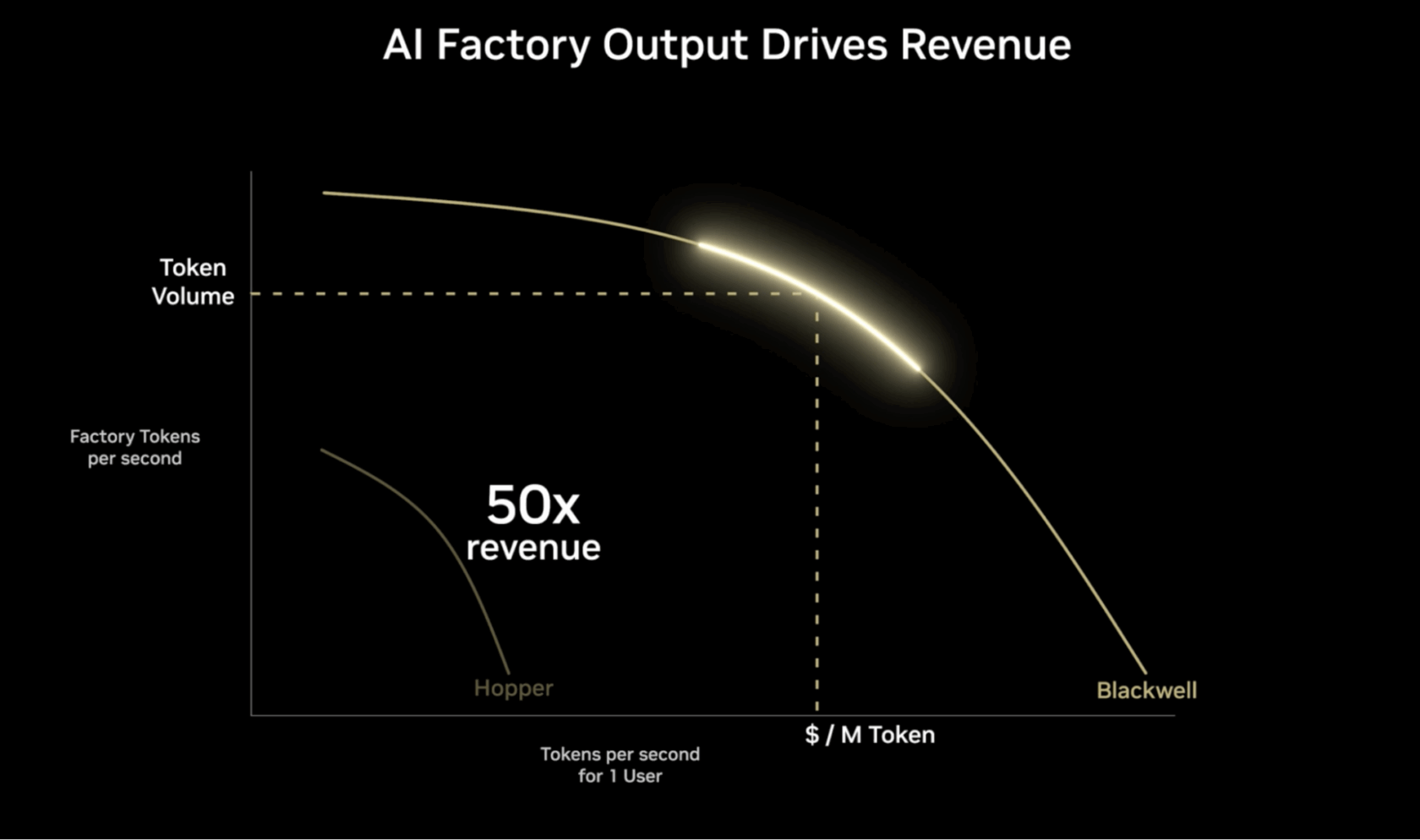

The Pareto frontier, represented in the figure below, helps visualize the most optimal ways to balance trade-offs between competing goals, like faster responses vs. serving more users simultaneously, when deploying AI at scale.

The vertical axis represents throughput efficiency, measured in tokens per second (TPS), for a given amount of energy used. The higher this number, the more requests an AI factory can handle concurrently.

The horizontal axis represents the TPS for a single user, an indication of how quickly a model delivers a response to that user’s prompt. The higher the value, the better the expected user experience. Lower latency and faster response times are generally desirable for interactive applications like chatbots and real-time analysis tools.

The Pareto frontier’s maximum value, shown as the top value of the curve, represents the best output for given sets of operating configurations. The goal is to find the optimal balance between throughput and user experience for different AI workloads and applications.
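One way to picture how such a frontier is found is to filter a set of candidate operating configurations down to those that no other configuration beats on both axes. The sketch below does this in Python; the configuration labels and numbers are invented for illustration, and `pareto_frontier` is a hypothetical helper, not part of any NVIDIA tool.

```python
def pareto_frontier(configs):
    """Return the configs that are not dominated on either axis.

    Each config is (label, tps_per_user, tps_per_energy). A config is
    dominated if another one is at least as good on both axes and
    strictly better on at least one.
    """
    frontier = []
    for label, user_tps, energy_tps in configs:
        dominated = any(
            (u >= user_tps and e >= energy_tps) and (u > user_tps or e > energy_tps)
            for _, u, e in configs
        )
        if not dominated:
            frontier.append((label, user_tps, energy_tps))
    # Order left to right along the horizontal (per-user TPS) axis.
    return sorted(frontier, key=lambda c: c[1])


# Hypothetical operating points: (label, TPS per user, TPS per unit of energy).
configs = [
    ("A", 20, 900),
    ("B", 50, 700),
    ("C", 50, 500),    # dominated by B
    ("D", 120, 300),
    ("E", 100, 250),   # dominated by D
    ("F", 200, 80),
]

for point in pareto_frontier(configs):
    print(point)
```

Configurations C and E drop out because another option matches or beats them on both measures. The remaining points trace the trade-off curve from which an operator would pick a balance for a given workload.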

The best AI factories use accelerated computing to increase tokens per watt, optimizing AI performance while dramatically increasing energy efficiency across AI factories and applications.

The animation above compares user experience when running on NVIDIA H100 GPUs configured to run at 32 tokens per second per user, versus NVIDIA B300 GPUs running at 344 tokens per second per user. At the configured user experience, Blackwell Ultra delivers over a 10x better experience and almost 5x higher throughput, enabling up to 50x higher revenue potential.
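The multipliers quoted above can be checked with a few lines of arithmetic. The per-user token rates come from the text; treating the revenue figure as the product of the two rounded gains is an assumption about how the number was derived, not something the source states.

```python
h100_tps_per_user = 32    # from the text
b300_tps_per_user = 344   # from the text

# Ratio of per-user token rates, the basis for "over a 10x better experience".
experience_gain = b300_tps_per_user / h100_tps_per_user

# Rounded figures as quoted in the text.
rounded_experience_gain = 10
rounded_throughput_gain = 5

# Assumption: revenue potential scales with the product of the two gains.
revenue_gain_estimate = rounded_experience_gain * rounded_throughput_gain

print(f"per-user speedup: {experience_gain:.2f}x")
print(f"estimated revenue potential gain: {revenue_gain_estimate}x")
```

Under that assumption, the per-user speedup of 10.75x supports the "over 10x" claim, and multiplying the rounded 10x and 5x gains reproduces the 50x figure.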

How an AI Factory Works in Practice

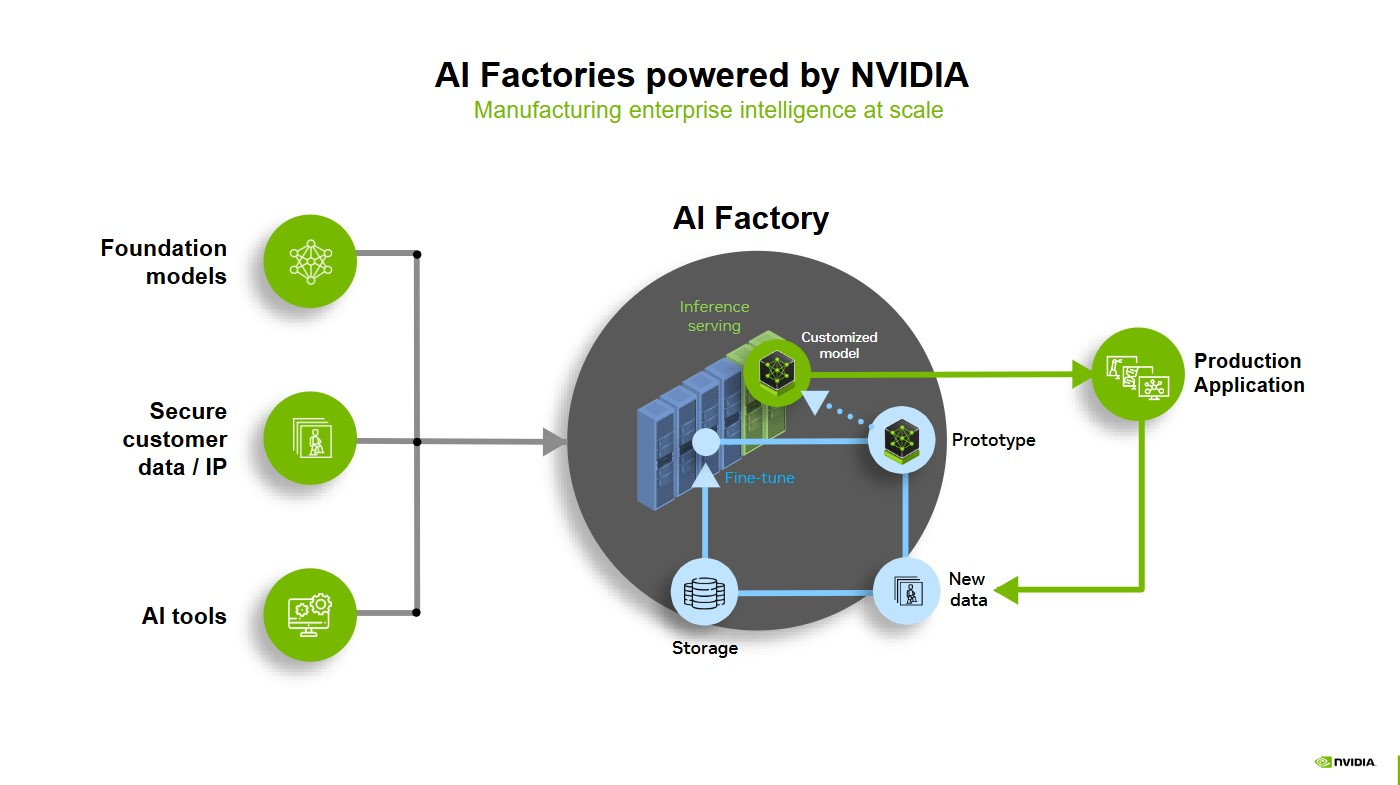

An AI factory is a system of components that come together to turn data into intelligence. It doesn’t necessarily take the form of a high-end, on-premises data center, but could be an AI-dedicated cloud or hybrid model running on accelerated compute infrastructure. Or it could be a telecom infrastructure that can both optimize the network and perform inference at the edge.

Any dedicated accelerated computing infrastructure paired with software turning data into intelligence through AI is, in practice, an AI factory.

The components include accelerated computing, networking, software, storage, systems, and tools and services.

When a person prompts an AI system, the full stack of the AI factory goes to work. The factory tokenizes the prompt, turning data into small units of meaning, like fragments of images, sounds and words.
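To make the tokenization step concrete, here is a deliberately simplified Python sketch for text prompts. Production systems typically use learned subword vocabularies of fixed size. The regex split and the on-the-fly vocabulary below are toy stand-ins, and `toy_tokenize` and `encode` are hypothetical names.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (toy stand-in for a real tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map each token to an integer ID, adding unseen tokens to the vocabulary."""
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)  # simplification: real vocabularies are fixed in advance
        ids.append(vocab[tok])
    return ids


vocab: dict[str, int] = {}
tokens = toy_tokenize("What is an AI factory?")
ids = encode(tokens, vocab)

print(tokens)
print(ids)
```

The integer IDs, rather than the raw text, are what the model consumes and produces.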

Each token is put through a GPU-powered AI model, which performs compute-intensive reasoning to generate the best response. Each GPU performs parallel processing, enabled by high-speed networking and interconnects, to crunch data simultaneously.

An AI factory will run this process for different prompts from users across the globe. This is real-time inference, producing intelligence at industrial scale.

Because AI factories unify the full AI lifecycle, this system is continuously improving: inference is logged, edge cases are flagged for retraining and optimization loops tighten over time, all without manual intervention. This is an example of goodput in action.

Leading global security technology company Lockheed Martin has built its own AI factory to support diverse uses across its business. Through its Lockheed Martin AI Center, the company centralized its generative AI workloads on the NVIDIA DGX SuperPOD to train and customize AI models, use the full power of specialized infrastructure and reduce the overhead costs of cloud environments.

“With our on-premises AI factory, we handle tokenization, training and deployment in house,” said Greg Forrest, director of AI foundations at Lockheed Martin. “Our DGX SuperPOD helps us process over 1 billion tokens per week, enabling fine-tuning, retrieval-augmented generation or inference on our large language models. This solution avoids the escalating costs and significant limitations of fees based on token usage.”

NVIDIA Full-Stack Technologies for AI Factory

An AI factory transforms AI from a series of isolated experiments into a scalable, repeatable and reliable engine for innovation and business value.

NVIDIA provides all the components needed to build AI factories, including accelerated computing, high-performance GPUs, high-bandwidth networking and optimized software.

NVIDIA Blackwell GPUs, for example, can be connected via networking, liquid-cooled for energy efficiency and orchestrated with AI software.

The NVIDIA Dynamo open-source inference platform offers an operating system for AI factories. It’s built to accelerate and scale AI with maximum efficiency and minimum cost. By intelligently routing, scheduling and optimizing inference requests, Dynamo ensures that every GPU cycle is fully utilized, driving token production with peak performance.
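The general idea of load-aware request routing can be sketched in a few lines of Python. This is not Dynamo’s API or its actual algorithm. `LeastLoadedRouter` is a hypothetical class showing one simple policy: send each request to the worker with the fewest pending tokens.

```python
import heapq


class LeastLoadedRouter:
    """Route each request to the worker with the fewest pending tokens (hypothetical policy)."""

    def __init__(self, worker_names):
        # Min-heap of (pending_tokens, worker_name); the lightest worker pops first.
        self._heap = [(0, name) for name in worker_names]
        heapq.heapify(self._heap)

    def route(self, expected_tokens: int) -> str:
        """Pick the least-loaded worker and charge it with the request's expected tokens."""
        load, name = heapq.heappop(self._heap)
        heapq.heappush(self._heap, (load + expected_tokens, name))
        return name


router = LeastLoadedRouter(["gpu-0", "gpu-1", "gpu-2"])
assignments = [router.route(n) for n in (500, 100, 100, 100, 300)]
print(assignments)
```

The sketch never subtracts load when a request finishes, so it only illustrates the placement decision. A real scheduler would also track completions, batching and cache locality.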

NVIDIA Blackwell GB200 NVL72 systems and NVIDIA InfiniBand networking are tailored to maximize token throughput per watt, making the AI factory highly efficient from both total throughput and low latency perspectives.

By adopting validated, optimized, full-stack solutions, organizations can build and maintain cutting-edge AI systems efficiently. A full-stack AI factory supports enterprises in achieving operational excellence, enabling them to harness AI’s potential faster and with greater confidence.

Learn more about how AI factories are redefining data centers and enabling the next era of AI.