NVIDIA is working with companies worldwide to build out AI factories, speeding the training and deployment of next-generation AI applications that use the latest advancements in training and inference.

The NVIDIA Blackwell architecture is built to meet the heightened performance requirements of these new applications. In the latest round of MLPerf Training (the 12th since the benchmark’s introduction in 2018), the NVIDIA AI platform delivered the highest performance at scale on every benchmark and powered every result submitted on the benchmark’s toughest large language model (LLM)-focused test: Llama 3.1 405B pretraining.

The NVIDIA platform was the only one that submitted results on every MLPerf Training v5.0 benchmark, underscoring its exceptional performance and versatility across a wide array of AI workloads, spanning LLMs, recommendation systems, multimodal LLMs, object detection and graph neural networks.

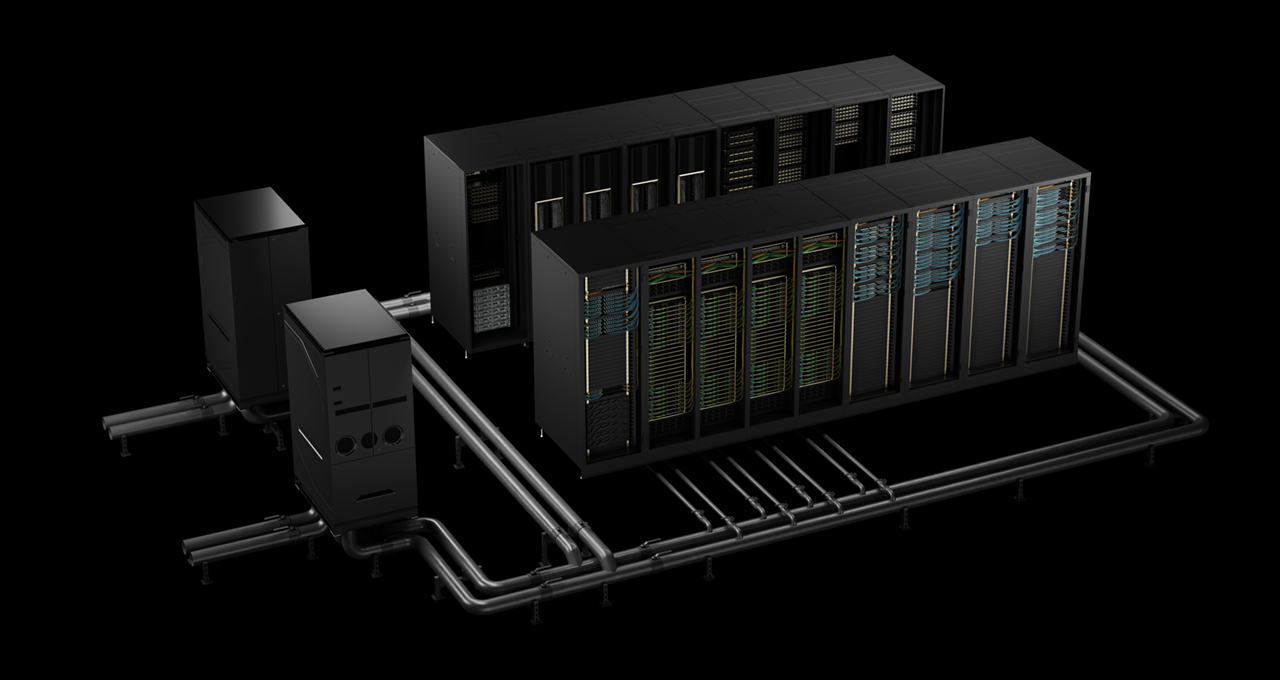

The at-scale submissions used two AI supercomputers powered by the NVIDIA Blackwell platform: Tyche, built using NVIDIA GB200 NVL72 rack-scale systems, and Nyx, based on NVIDIA DGX B200 systems. In addition, NVIDIA collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 NVIDIA Grace CPUs.

On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2x greater performance compared with the previous-generation architecture at the same scale.

On the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA DGX B200 systems, powered by eight Blackwell GPUs, delivered 2.5x more performance compared with a submission using the same number of GPUs in the prior round.

These performance leaps highlight advancements in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory per rack, fifth-generation NVIDIA NVLink and NVIDIA NVLink Switch interconnect technologies for scale-up and NVIDIA Quantum-2 InfiniBand networking for scale-out. Plus, innovations in the NVIDIA NeMo Framework software stack raise the bar for next-generation multimodal LLM training, critical for bringing agentic AI applications to market.

These agentic AI-powered applications will one day run in AI factories, the engines of the agentic AI economy. These new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic domain.

The NVIDIA data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as a vast array of software like NVIDIA CUDA-X libraries, the NeMo Framework, NVIDIA TensorRT-LLM and NVIDIA Dynamo. This highly tuned ensemble of hardware and software technologies empowers organizations to train and deploy models more quickly, dramatically accelerating time to value.

The NVIDIA partner ecosystem participated extensively in this MLPerf round. Beyond the submission with CoreWeave and IBM, other compelling submissions were from ASUS, Cisco, Dell Technologies, Giga Computing, Google Cloud, Hewlett Packard Enterprise, Lambda, Lenovo, Nebius, Oracle Cloud Infrastructure, Quanta Cloud Technology and Supermicro.

Learn more about MLPerf benchmarks.